Twitter Scraping: Scrape Profile Data With Python API | Infatica

To run a search with twint, you first need to create a config object: `config = twint.Config()`. Next, you'll need to configure the config object for your desired query. For this example, I'll be scraping.

Step 2: Create a squid. Saying "crawler" or "scraper" sounds boring; that's why we call them squids. To create a new squid, click New Squid on your Lobstr dashboard and search for "Twitter User Tweets". Select the Twitter User Tweets scraper, and you're ready to configure it. Next, we'll add the input.

How To Scrape Twitter Data Without Using API (No Coding)

1. Lobstr.io. Our first contender is Lobstr.io, a France-based web scraping company offering a variety of ready-made, cloud-based scraping tools. Among its scraping modules, Lobstr offers three Twitter scrapers for extracting data from Twitter profiles, user tweets, and Twitter trends and search results.

Step 1: Get the Twitter search URL. The first step is to get the search URL from Twitter. With Lobstr, you can scrape both top and latest tweets from any trend or search query by simply copying and pasting the search URL. We're going to scrape all top tweets on #bitcoin posted between March 9 and March 13, 2024. Let's use Twitter advanced search to.

Click on the blank area and then click "Loop single element." You also need to define the pagination settings so the scraper goes through multiple Twitter search results pages. Select the specific elements you want to extract and click "Extract data" to get all the data fields you want. Now we are moving on to our final step.

The algorithm for scraping tweets is simple:

1. Open Twitter search with an advanced search query.
2. Scrape specific tags to get their values.
3. Scroll.
4. Repeat the steps until you have scraped the number of tweets you need.
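Step 1 relies on a search URL that encodes the query and the date range. The sketch below builds such a URL for the #bitcoin example. It assumes the `https://x.com/search` endpoint, the `since:`/`until:` advanced-search operators, and the `src` and `f` query parameters (`f=top` is assumed to select Top; `f=live` is commonly used for Latest). None of these come from the article, so verify them against a URL copied from your own browser.

```python
from urllib.parse import quote, urlencode


def build_search_url(query: str, since: str, until: str, tab: str = "top") -> str:
    """Build a Twitter/X search URL for `query` restricted to a date range.

    Assumed, not taken from the article: the x.com/search endpoint,
    the since:/until: operators, and the `src`/`f` parameters.
    """
    # Advanced-search operators are appended to the query text itself.
    full_query = f"{query} since:{since} until:{until}"
    params = {"q": full_query, "src": "typed_query", "f": tab}
    # quote_via=quote encodes spaces as %20 instead of '+'.
    return "https://x.com/search?" + urlencode(params, quote_via=quote)


if __name__ == "__main__":
    print(build_search_url("#bitcoin", "2024-03-09", "2024-03-13"))
```

The resulting URL is what you would paste into a tool like Lobstr; building it in code simply makes the query reproducible when you change the hashtag or dates.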
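The scrape, scroll, and repeat loop above can be sketched as follows. This is a minimal illustration, not any tool's actual implementation: `fetch_page` is a hypothetical callback standing in for the browser or HTTP layer, returning the tweets visible after one scroll plus a cursor for the next scroll (or `None` when nothing more loads). Tweets are assumed to be dicts with an `"id"` key.

```python
from typing import Callable, List, Optional, Tuple

# Hypothetical page shape: (tweets visible after this scroll, cursor for the next one).
Page = Tuple[List[dict], Optional[str]]


def collect_tweets(
    fetch_page: Callable[[Optional[str]], Page],
    target: int,
    max_scrolls: int = 50,
) -> List[dict]:
    """Repeat 'scrape visible tweets, then scroll' until `target` tweets are collected."""
    seen_ids = set()
    collected: List[dict] = []
    cursor: Optional[str] = None  # None means the initial page load
    for _ in range(max_scrolls):
        tweets, cursor = fetch_page(cursor)
        for tweet in tweets:
            # Consecutive scrolls can show the same tweet again, so de-duplicate by id.
            if tweet["id"] not in seen_ids:
                seen_ids.add(tweet["id"])
                collected.append(tweet)
                if len(collected) >= target:
                    return collected
        if cursor is None:  # nothing more to scroll to
            break
    return collected


if __name__ == "__main__":
    # Fake pages standing in for successive scrolls of a search results page.
    pages = {
        None: ([{"id": 1}, {"id": 2}], "c1"),
        "c1": ([{"id": 2}, {"id": 3}], "c2"),
        "c2": ([{"id": 4}], None),
    }
    print([t["id"] for t in collect_tweets(lambda c: pages[c], target=3)])
```

The `max_scrolls` cap is a design choice: it guarantees the loop ends even if the page keeps returning a cursor without yielding new tweets.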

Twitter Data Extraction · GitHub Topics · GitHub

Here's an example of how to scrape user information using twint: `import twint; c = twint.Config(); c.Username = "elonmusk"; twint.run.Lookup(c)`. In this example, we are scraping information about Elon Musk's Twitter account. We can get information such as his name, bio, location, followers, following, and more.

A user's tweets can then be fetched with `tweets = user.get_tweets('Tweets', count=5)`.

7. Store scraped X data. After getting the list of tweets from Twitter, you can loop through them and store the scraped tweet properties. Our example collects properties such as the creation date, favorite count, and full text of each tweet.
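Storing the scraped properties can be as simple as writing one CSV row per tweet. The sketch below assumes each tweet object exposes `created_at`, `favorite_count`, and `full_text` attributes (matching the properties named above); adjust `FIELDS` to whatever your scraping library actually returns. `SimpleNamespace` objects stand in for real tweets.

```python
import csv
import io
from types import SimpleNamespace
from typing import Iterable, TextIO

# Assumed attribute names for the creation date, favorite count, and full text.
FIELDS = ["created_at", "favorite_count", "full_text"]


def store_tweets(tweets: Iterable[object], out: TextIO) -> int:
    """Write a header plus one CSV row per tweet; return the number of rows written."""
    writer = csv.DictWriter(out, fieldnames=FIELDS)
    writer.writeheader()
    count = 0
    for tweet in tweets:
        # getattr keeps this independent of any particular tweet class.
        writer.writerow({field: getattr(tweet, field) for field in FIELDS})
        count += 1
    return count


if __name__ == "__main__":
    sample = [
        SimpleNamespace(created_at="2024-03-13", favorite_count=42, full_text="Hello, #bitcoin"),
    ]
    buffer = io.StringIO()
    store_tweets(sample, buffer)
    print(buffer.getvalue())
```

To write to disk instead, open the file with `open("tweets.csv", "w", newline="", encoding="utf-8")` and pass it as `out`; `newline=""` is what the `csv` module documentation recommends.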

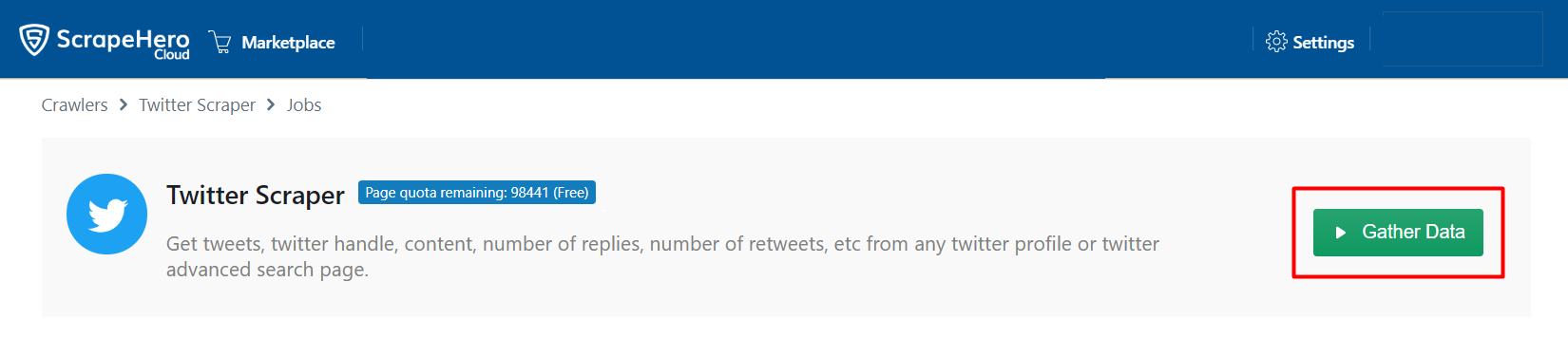

